Virtual Live Concert • Virtual Production • Unreal Engine

MIYAVI Synthesis Level 5.0:

Creating a Virtual Live Event

Amazon Music Japan hosted a free live-streamed performance on their Twitch channel on Monday, December 28th. The stream featured songs from Miyavi’s latest albums, “Holy Nights” and “No Sleep Til Tokyo”, and debuted ground-breaking technology following an international collaboration with directors Annie Stoll and Dyan Jong, Japanese production companies Mothership Tokyo and CyberHuman, and Pyramid3.

Because the project was developed during international quarantine, the crew pushed the bounds of collaboration: almost all of the artists involved worked in tandem from their own homes across Los Angeles, New York, Tokyo, and Italy. By moving from physical to virtual production, the team used significantly fewer resources and reduced the damage caused by noise, light, and physical pollution. The project also seeks to strengthen the conversation around the need for change at every level.

The Show.

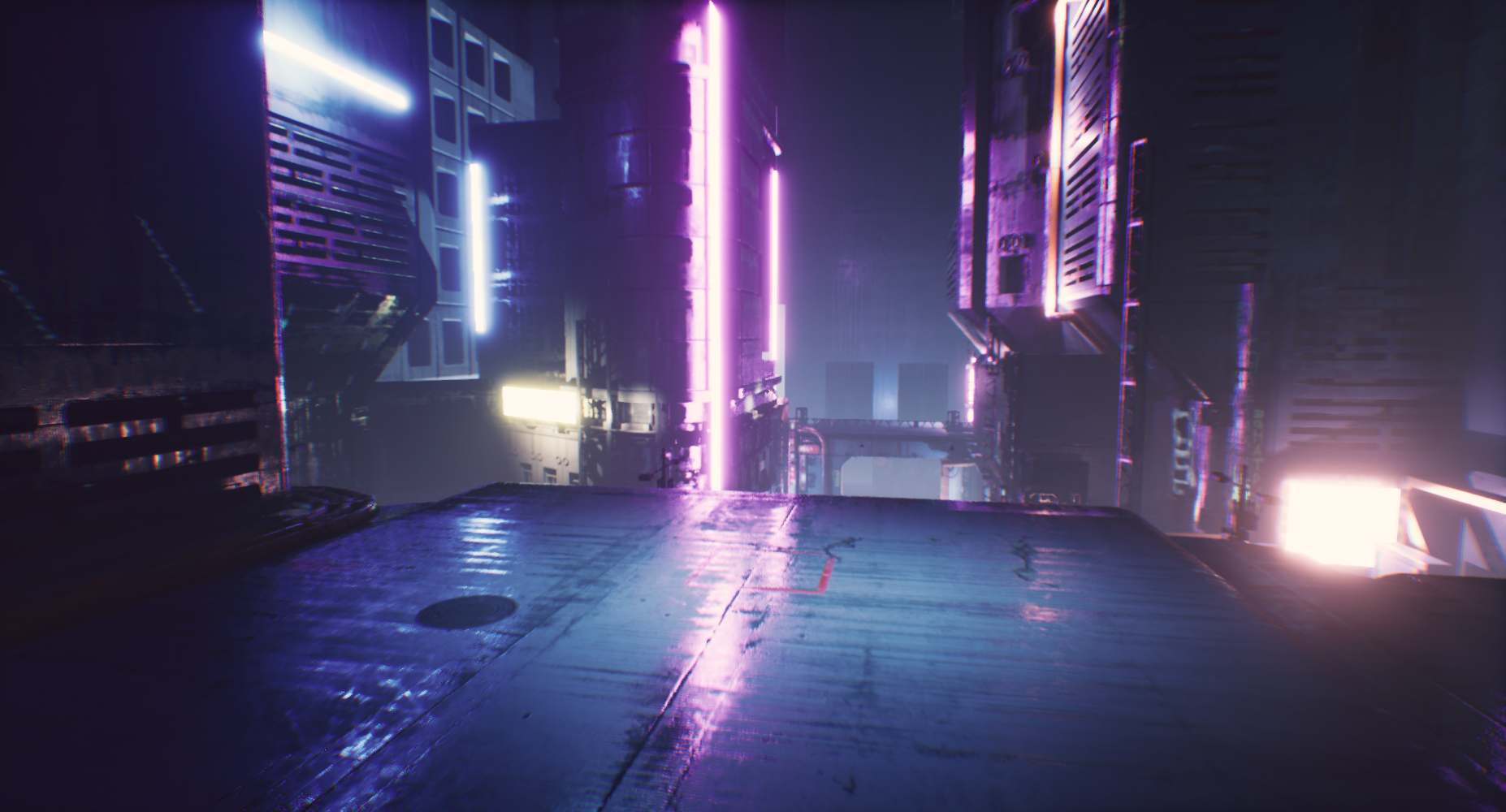

Miyavi appears immersed in a burning city. As it darkens and turns to ashes around him, he rises using his inner strength and the scene transforms into an island. A quiet moment of concentration leads to fragile new growth and dreams for a better world. After chatting with the audience and calling for their support, Miyavi rallies them to join him on the way to a better future.

The show was also streamed to Amazon Music’s official Twitch channel.

The Why?

As a Goodwill Ambassador for the United Nations High Commissioner for Refugees, Miyavi wanted to use his position to draw attention to the global issues of climate change, the refugee crisis, and educational and financial disparity throughout the world, issues further exacerbated by the COVID-19 pandemic. A live stream felt like the best way to engage with his fans in real time through conversation, chat, and creative work. We based the story around that.

In Miyavi’s own words: “This year, the unprecedented spread of diseases has transformed the global economy we had become comfortable in. As a result, it has become a year for musicians to reconsider the message we convey in our music. Climate change, refugee issues, hunger, poverty, inequality, and pandemic: look around. The world is on fire. How can we deal with the global problems we face? How can we commit to the future of the planet through music and art? There may be a limit to what we can do, but we may find a new way of life by fusing with technology.”

Narrative Structure

A lot of thought went into how to structure the livestream. Rather than performing one song after another, we wanted to create a narrative experience that made use of our ability to switch between virtual sets on demand. We made use of three narrative tools throughout the show:

Audience interactivity (via Twitch chat), which allowed for segments of live conversation with fans.

Topical monologues, which gave Miyavi space to push the message of the show.

Set progression, which allowed us to build out a basic story that followed Miyavi’s climate emergency narrative and created a visual arc for the livestream to anchor around.

We split the narrative of Miyavi’s journey into three acts: today’s apocalyptic landscape (anxiety for the future), an isolated island where change is catalyzed by his will, and finally, a vibrant world of healing where change can occur.

Virtual Sets

All sets in Synthesis were built in Unreal Engine, the same way you would build a video game level. Because of the immense processing power required to run the sets, live-key, composite, and render the final image, we had to limit our sets to the spatial dimensions of the physical studio. This meant that the sets became theatrical, which was an interesting creative challenge. Our core tool was Zero Density’s Reality Engine, a custom version of Unreal Engine. The software allowed us to match the real and virtual worlds: everything from the camera and visual effects to the lights was matched directly.

From Stage to World

1. Miyavi in CyberHuman's studio in Tokyo, Japan.

2. Our Unreal Engine game level makes up the 3D set with custom visual effects (in this case a complex particle system).

3. The camera feeds into Reality Engine, which keys and tracks the space in real time and delivers the live feed sent to Twitch.

4. The final composited image - rendered in real-time - with virtual set, visual effects, camera tracking and lighting matching.

Matching Physical and Virtual

CyberHuman sent us a 3D scan of their entire studio space with a reference human. The model could then be used in Unreal Engine at a 1:1 scale to match the virtual set with the physical one. This allowed us to set the correct scale when building environments, match light positions to real-world locations and define the moveable space Miyavi could use in our virtual sets.

An early test of the virtual island set without the 3D scan of the studio. You can see how the sand patch was made to fit the center of the green-screen studio.
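The scale matching described above can be sketched in a few lines of Python. This is only an illustration, not the production workflow: the `Box` type, the `scan_scale` helper, and every dimension below are hypothetical. The one fixed fact it relies on is that Unreal Engine's default unit is one centimetre. The sketch rescales a scan using the reference human's known height, then checks that a set piece (such as the sand patch) stays inside the studio footprint.

```python
from dataclasses import dataclass

CM_PER_M = 100.0  # Unreal Engine's default unit is one centimetre


@dataclass
class Box:
    """Axis-aligned floor footprint in Unreal units (cm), centred on (cx, cy)."""
    cx: float
    cy: float
    width: float
    depth: float

    def contains(self, other: "Box") -> bool:
        """True if `other` lies entirely inside this footprint."""
        return (
            abs(other.cx - self.cx) + other.width / 2 <= self.width / 2
            and abs(other.cy - self.cy) + other.depth / 2 <= self.depth / 2
        )


def scan_scale(reference_height_in_scan: float, real_height_m: float) -> float:
    """Factor that rescales the scan so the reference human is life-size in cm."""
    return real_height_m * CM_PER_M / reference_height_in_scan


# Hypothetical numbers: a scan authored in metres with a 1.75 m reference human.
scale = scan_scale(reference_height_in_scan=1.75, real_height_m=1.75)

# Assumed 6 m x 4 m studio floor, converted to Unreal units with the scan scale.
studio = Box(0.0, 0.0, 6.0 * scale, 4.0 * scale)

# Assumed 3 m x 2.5 m sand patch placed at the centre of the studio.
sand_patch = Box(0.0, 0.0, 300.0, 250.0)
fits = studio.contains(sand_patch)
```

The same containment check could be used to flag any prop or performer mark that drifts outside the usable floor area before a set goes to the studio.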

Live Effects

Live events thrive when effects and stage design move and react to the music. Our goal was to replicate the energy of a concert while adding elements that could never be achieved on a real stage, or only at great cost. Complex visual features that take expensive tech on a physical stage could be achieved with little effort on a virtual one.

Each set included a selection of real-time controls that triggered or manipulated real-time visual effects. Our team in Tokyo was then able to control them (VJ) on-site while Miyavi performed: from timed scene changes, particle system movement, and pre-made animations to triggering explosions.
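As a rough illustration of how per-set controls like these can be wired up, here is a minimal Python sketch of a cue board that maps named operator controls to effect callbacks. It is not how the show's controls were implemented in Unreal Engine; the `CueBoard` class, the cue names, and the `state` dictionary are all hypothetical stand-ins.

```python
from typing import Callable, Dict, List


class CueBoard:
    """Maps named operator controls to effect callbacks (hypothetical design)."""

    def __init__(self) -> None:
        self._cues: Dict[str, Callable[[float], None]] = {}
        self.log: List[str] = []

    def register(self, name: str, effect: Callable[[float], None]) -> None:
        """Attach an effect callback to a control name."""
        self._cues[name] = effect

    def fire(self, name: str, amount: float = 1.0) -> bool:
        """Run the effect for `name`; unknown cues are logged and ignored."""
        effect = self._cues.get(name)
        if effect is None:
            self.log.append(f"ignored unknown cue: {name}")
            return False
        clamped = max(0.0, min(1.0, amount))  # keep fader values in [0, 1]
        effect(clamped)
        self.log.append(f"{name} @ {clamped:.2f}")
        return True


# Stand-in for engine state; a real set would drive effect parameters instead.
state = {"particle_speed": 0.0, "explosions": 0}


def set_particle_speed(amount: float) -> None:
    state["particle_speed"] = amount


def trigger_explosion(amount: float) -> None:
    state["explosions"] += 1


board = CueBoard()
board.register("particle_speed", set_particle_speed)
board.register("explosion", trigger_explosion)

board.fire("particle_speed", 0.8)  # continuous control, like a fader
board.fire("explosion")            # one-shot trigger, like a button
```

Ignoring unknown cues rather than raising an error is a deliberate choice for this sketch: during a live show, a mistyped control should not interrupt the performance.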

Brainwave EEG

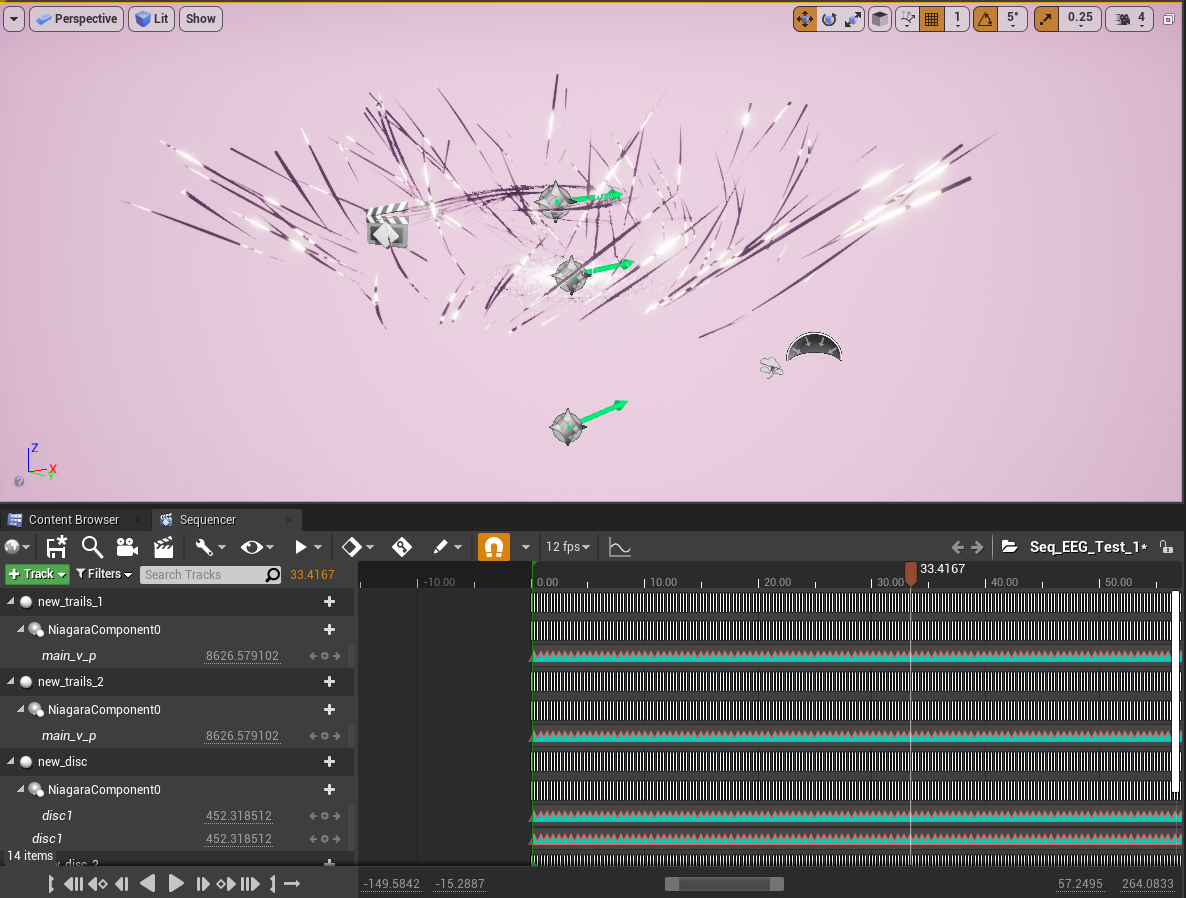

A unique element of the performance was the integration of brainwave EEG data. We collaborated with EEG visual artist Bora Aydintug to collect and interpret Miyavi’s brain waves while he meditated on the future of the world. These visualized brain waves appeared during the performance as a special moment in the show, where Miyavi called upon his fans to join him in healing the world with their united wills.

Getting EEG data into Unreal Engine for playback and visual interpretation was an extensive process. The raw EEG data provided dozens of real-time variables that needed to be processed with our own formulas. To bring the data into Unreal Engine, we turned the raw data (recorded by Miyavi) into animation data, passed it into Unreal via an FBX container, and then re-interpreted it as spatial data vectors (x, y, z), intensity, and added noise. The data was then brought into Sequencer and used to manipulate a Niagara System directly (see image above right).

A small excerpt of the raw EEG data. We dealt with over 4000 frames that needed cleanup and about 50 raw variables.

The resulting Niagara System using EEG data
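To make the cleanup and re-interpretation step more concrete, here is a minimal Python sketch of one way to turn raw per-frame EEG variables into position, intensity, and noise values. It is not the team's actual formulas, which are not given here. The channel names (`alpha`, `beta`, `theta`, `gamma`), their assignment to axes, the smoothing window, and the noise amount are all assumptions chosen for illustration.

```python
import random
from typing import Dict, List, Sequence


def moving_average(values: Sequence[float], window: int) -> List[float]:
    """Smooth a signal with a trailing moving average of up to `window` frames."""
    out: List[float] = []
    total = 0.0
    for i, v in enumerate(values):
        total += v
        if i >= window:
            total -= values[i - window]  # drop the frame that left the window
        out.append(total / min(i + 1, window))
    return out


def normalise(values: Sequence[float]) -> List[float]:
    """Rescale a signal to the range [0, 1]; a flat signal maps to zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def eeg_to_particle_frames(
    channels: Dict[str, Sequence[float]],
    window: int = 5,
    noise: float = 0.02,
    seed: int = 0,
) -> List[Dict[str, float]]:
    """Map cleaned channels to per-frame x, y, z, intensity, and noise values.

    The channel-to-axis mapping below is an assumption for illustration only.
    """
    rng = random.Random(seed)  # seeded so playback is repeatable
    clean = {
        name: normalise(moving_average(list(vals), window))
        for name, vals in channels.items()
    }
    frame_count = min(len(vals) for vals in clean.values())
    frames: List[Dict[str, float]] = []
    for i in range(frame_count):
        frames.append({
            "x": clean["alpha"][i] * 2 - 1,   # remap [0, 1] to [-1, 1]
            "y": clean["beta"][i] * 2 - 1,
            "z": clean["theta"][i] * 2 - 1,
            "intensity": clean["gamma"][i],
            "noise": rng.uniform(-noise, noise),
        })
    return frames
```

Each resulting frame could then be baked into animation curves for import. The seeded random generator keeps the added noise identical between rehearsal and show.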